High-Stakes – Under the bonnet of end-point assessment

In the coming weeks, we’ll be releasing a series of articles taking you through the end-point assessment methods used in new apprenticeship assessment plans, and some of the practical issues to consider.

Welcome to Article #1. Here’s a look under the bonnet of “high-stakes” end-point assessment and how it’s starting to shape up.

Apprenticeship end-point assessment is happening. Every apprentice aiming for one of the new apprenticeship standards will face a formal end-point assessment at the end of their programme.

The differences between the continuous assessment of a framework-based apprenticeship and the end-point assessment for a standard are clear and are starting to be understood.

The rigour of the end-point assessment is starting to be valued by employers, even though many current apprenticeship providers are not yet convinced.

At the same time, the role of the apprenticeship trainer-assessor is changing radically. In the old world, the focus was on training, the assembly of the portfolio and its continuous assessment.

In the new, any on-programme assessment will be focused on the progress the apprentice is making, on their success in passing through the new Gateway, and ultimately on their preparation for end-point assessment.

(The Gateway is the set of requirements laid down in each apprenticeship standard that have to be met before an apprentice can attempt end-point assessment.)

End-point assessment is “high stakes assessment”

End-point assessment is tough, it’s different and it’s high-stakes.

What I mean is that, unlike framework-based apprenticeships, where there’s plenty of time to hone the portfolio, success or failure at the end-point can have a fundamental bearing on the start of a person’s career or on their progression.

Also, the fact that the stakes are high could lead to misdirected effort to “train for the test” and hot-house apprentices as they approach the end of their programme.

And the apprentice will be assessed by a stranger (the independent end-point assessor) rather than by the trainer-assessor with whom they have built a relationship over what could be a programme of three years or more.

On the plus side we are seeing in the early tranches of end-point assessment, in industries such as digital and energy, that employers are truly valuing the rigour of the process and are feeling in many cases that apprentices are much better prepared and job ready.

As more end-point assessments are done, it is hoped that this “parity of rigour” as opposed to “parity of esteem” will place apprenticeships at least alongside academic routes for employers, labour market entrants, and their parents.

Employers as assessment designers

Trailblazer employer groups have been writing assessment plans over the last five years. The guidance they have been following and their understanding of what constitutes rigorous, valid and robust assessment are still evolving.

There are many options and combinations of assessment methods that may be chosen for each assessment plan. How these methods are chosen and how they are put together to infer competence is down to the assessment plan designers, in other words to the employers.

Employers understand what it takes to do a job well. They are not assessment design specialists and so assessment plans developed over the last five years are variable in design, quality, usability, and affordability.

Many plans are very good; they are not all great; but they do have one thing in common: both the employers and the government have signed them off.

So it’s now the job of the new end-point assessment organisations (EPAOs), and the end-point assessors they employ, to deliver rigorous, valid, robust and fair assessments for every apprentice, consistently.

Under the bonnet of assessment plans

During our work with the employer groups, apprenticeship providers, and the new EPAOs, we’ve had to get under the bonnet of many assessment plans, looking at how they can be put into practice and how assessors can be prepared to deliver consistency.

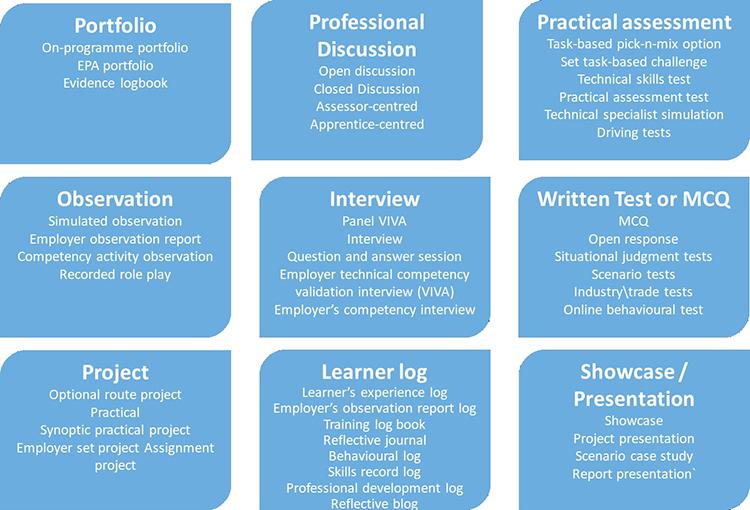

We’ve unpacked that and have come up with a more detailed breakdown of the possibilities for assessment methods.

We’ve also conducted an analysis of the methods used in assessment plans. In the 208 published assessment plans, a total of 637 assessment methods have been deployed. That’s just over three per plan.

The breakdown looks like this:

In the coming weeks, we’ll be releasing a series of articles taking you through each assessment method in turn: looking at some of the standards they are used in; giving you an overview of how each assessment method works; considering how reliable, valid and robust they can or should be; and flagging some of the practical issues you may encounter as you use them.

We’re very keen to hear from assessors who are using these methods in their end-point assessments every day.

We would love to feature your experiences of how end-point assessment is working in practice.

Let us know if you have any comments at the bottom of this page.

Colin Bentwood, Managing Director (and EPA programme manager), Strategic Development Network (SDN)

To get to grips with end-point assessment in more depth, places are now available on our Level 3 Award course in Undertaking End-Point Assessment. SDN are also producing a set of recorded presentations covering the main end-point assessment methods and critical areas of practice.

These will be available at the beginning of March. Find out more here: www.strategicdevelopmentnetwork.co.uk/sdnevent

More updates and practice can be accessed on our new End-Point Assessment LinkedIn Group and through our mailing list – feel free to join!
