Navigating the Future of AI Safety in Education

In this article, Jamie discusses some of the key issues around the safe and responsible development and application of emerging AI platforms in education, and shares insights from some colleges that are already leading the way.

Right now, my experience with some of the well-known AI platforms occasionally goes a little like this. Ask a question via a prompt; receive an answer containing a bold and audacious statement. Type a more refined prompt seeking data sources and links to verify the bold, audacious statement. Receive an answer with the requested links. Click on the links and be taken to McDonald’s wrap of the day or similarly random and irrelevant information (unless by chance you fancy a McDonald’s). Refine the prompt further to request data sources yet again. Receive a final response saying ‘I can’t help you with that’. AI is clearly in its infancy and has a long way to go, but its potential to shape and change our world is also clear, and it can get a lot right. For educators, the arrival of AI platforms presents the familiar mix of challenges and opportunities to navigate as digital disruption continues to accelerate.

As artificial intelligence (AI) continues to embed itself into ever more aspects of our lives, its impact on how we learn, teach, and assess student progress is only just starting to emerge. While the potential benefits of AI in education are immense, so too are the challenges that must be addressed to ensure its safe and ethical implementation. This is one area where the UK is showing leadership.

The UK Secretary of State for Science, Innovation and Technology presented a White Paper titled ‘A pro-innovation approach to AI regulation’ on 29 March 2023, and the UK subsequently held the AI Safety Summit at Bletchley Park on 1-2 November 2023. The shared objectives of both developments can be summarised as follows.

Proportionality: AI regulation should be proportionate to the risks posed by AI. This means that regulation should be targeted at the most serious risks, and it should not stifle innovation where it’s not necessary.

Transparency: AI regulation should be transparent and accountable. This means that the Government should be clear about its reasons for regulating AI, and it should provide opportunities for public consultation.

International cooperation: AI regulation should be developed in an international context. This means that the UK Government will work with other countries to develop common standards for AI regulation.

The UK AI Safety Summit brought together experts from government, industry, academia and beyond. Its output was the adoption of the ‘Bletchley Declaration’, which outlines a shared consensus on the need for international collaboration on AI safety.

The Bletchley Declaration’s areas of focus include:

The risks of frontier AI: The summit recognised the risks posed by frontier AI, such as its potential to exacerbate societal inequalities, undermine human autonomy, and cause harm to individuals and groups.

The need for a multi-stakeholder approach: The summit emphasised the need for a multi-stakeholder approach to AI safety, involving Governments, businesses, researchers, and civil society.

The importance of transparency and accountability: The summit stressed the importance of transparency and accountability in the development and deployment of AI systems.

The potential of AI to benefit society: The summit also recognised the potential of AI to benefit society, and called for a balanced approach that mitigates risks while harnessing AI’s transformative potential.

Public engagement and education: Countries will promote public engagement and education about AI to ensure that citizens are informed and empowered to participate in discussions about AI governance.

Within this context, educators across the world and here in the UK have already started to use new AI platforms in a variety of ways, and have done so both with impact and within a culture of safety. City College Plymouth, for example, has an AI Policy that provides a framework for the safe application and use of AI platforms for teaching and learning, while Basingstoke College of Technology (BCoT) has tested embedding Google Bard across curriculum areas with significant measurable impact.

“Some people call this artificial intelligence, but the reality is this technology will enhance us. So instead of artificial intelligence, I think we’ll augment our intelligence.” Ginni Rometty

What would you do with an extra 12 thousand years?

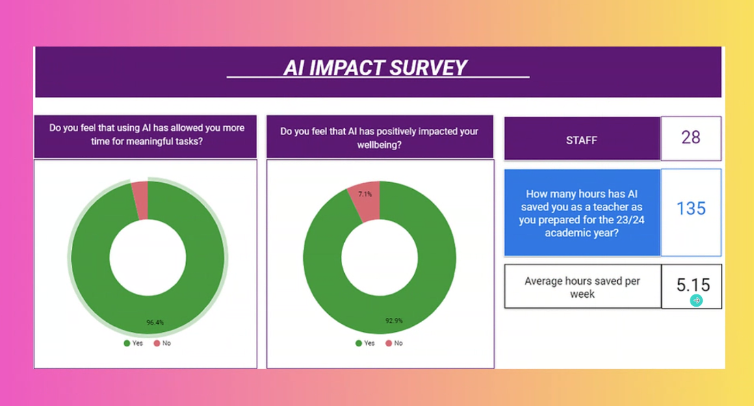

BCoT has applied Google Bard across a sample of 28 staff and has measured 5.15 hours of time saved per person per week. If we take this data on a little imaginative journey, in a not at all academically rigorous way and with a huge margin of error, we can imagine what the impact at national scale might be were educators across the UK to adopt a similar approach to BCoT.

According to the Department for Education’s Education Workforce Census 2021 survey, there were approximately 565,800 teachers in the UK.

According to the 2023 National Education Union (NEU) Survey of Teachers’ Salaries, the average hourly pay for a UK teacher is £29.44. For the purpose of making a point, let’s call it £30 per hour and round the time saved down to 5 hours a week. Putting these figures together, the average financial efficiency gain per teacher could be broadly £150 a week (5 hours × £30). Across the UK’s 565,800 teachers, that translates to £84,870,000 a week in potential efficiency gains.

If we assume 39 weeks of term time, then annually the UK education sector could realise financial efficiencies to the value of £3,309,930,000 based on these assumptions. So broadly around the £3.3 billion mark. That’s quite a lot of money.

In time, taking 5 hours saved per teacher, that’s 2,829,000 hours a week, or 110,331,000 hours a year over 39 weeks. That’s 4,597,125 days a year, or just over 12,594 years. What would you do with an extra twelve thousand years?
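For anyone who wants to check the sums, here is a minimal Python sketch of the back-of-envelope calculation. It assumes, as the £150 figure implies, that the 5.15 hours is rounded down to 5 and the £29.44 hourly rate is rounded up to £30. The function and variable names are illustrative only, and the result is no more rigorous than the assumptions that go into it.

```python
# Back-of-envelope estimate using the article's rounded figures.
# All names here are illustrative; the inputs are assumptions, not measurements.
TEACHERS = 565_800         # approximate UK teacher headcount quoted in the article
HOURS_SAVED_PER_WEEK = 5   # BCoT's 5.15 hours, rounded down
HOURLY_PAY_GBP = 30        # 29.44 rounded up
TERM_WEEKS = 39            # assumed weeks of term time per year


def estimate(teachers=TEACHERS, hours=HOURS_SAVED_PER_WEEK,
             pay=HOURLY_PAY_GBP, weeks=TERM_WEEKS):
    """Scale a per-teacher weekly time saving up to national totals."""
    weekly_hours = teachers * hours
    annual_hours = weekly_hours * weeks
    return {
        "weekly_gbp": weekly_hours * pay,
        "annual_gbp": annual_hours * pay,
        "weekly_hours": weekly_hours,
        "annual_hours": annual_hours,
        "annual_days": annual_hours / 24,        # 24-hour days
        "annual_years": annual_hours / 24 / 365,  # 365-day years
    }


if __name__ == "__main__":
    for name, value in estimate().items():
        print(f"{name}: {value:,.0f}")
```

Calling `estimate(hours=5.15, pay=29.44)` instead shows how much the totals move when the unrounded inputs are used, which is a useful reminder of how sensitive the headline number is to small changes in the assumptions.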

The question we should address is how we will realise these benefits. If the prize is more work to fill the 5.15 hours per week per person, then I’d suggest we will have gained nothing. My contention is that technology has to do two things: be easy to use and make life better. The time we save should be used wisely in this context.

Navigating the Challenges: Bias, Fairness, and Safeguarding

Despite its transformative potential, AI also raises concerns about bias, fairness, and safeguarding in education. AI algorithms can perpetuate and amplify existing societal biases, leading to discriminatory outcomes in education when applied in the wrong context.

The opaque nature of many AI algorithms can make it difficult to identify and address biases. Lack of transparency in the decision-making processes of AI-powered systems can hinder accountability and make it challenging to ensure fairness and equity in education.

Safeguarding children’s privacy and safety in the digital learning environment is also paramount. AI-powered educational tools should be designed with robust privacy protections in place, ensuring that student data is collected, stored, and used responsibly and ethically. Leading the way in this context are companies like Merlyn Mind, whose AI platform is the only one that combines the best general-purpose AI with proprietary large language models and safety protocols built specifically for education.

Collaboration for a Safe and Ethical Future

“Forget artificial intelligence – in the brave new world of big data, it’s artificial idiocy we should be looking out for.” Tom Chatfield

Addressing the challenges of AI safety and safeguarding in education requires a multifaceted approach that involves collaboration between educators, policymakers, researchers, and industry stakeholders.

Educators need to be equipped with the knowledge and skills to effectively integrate AI into their classrooms while upholding ethical principles. Training should focus on identifying and addressing bias in AI systems, promoting inclusive teaching practices, and ensuring data privacy and security, as well as how to make the most of the fabulous new tools at our collective disposal.

Governments and educational institutions should establish clear policies and regulations governing the use of AI in education. These policies should address data privacy, algorithmic transparency, and ethical considerations to ensure that AI is used responsibly and fairly.

Researchers should continue to explore and develop AI algorithms that are fair, unbiased, and transparent. Research should focus on developing methods for identifying and mitigating bias, improving algorithmic explainability, and enhancing data security mechanisms.

Industry partners should collaborate with educators and policymakers to develop AI solutions that are aligned with educational goals and ethical principles. This collaboration should focus on creating AI tools that promote personalisation, automation, and accessibility while upholding privacy, fairness, and safeguarding. Declaration of interest here: this is what C-Learning has been focusing on for some time, and our team is happy to talk to anyone interested in this area.

As we move forward, we must prioritise transparency, collaboration, and ethical guidelines. We must ensure that AI is used to supplement, not replace, teachers; when deploying edtech solutions, those that place the teacher at the heart of the design are likely to win. We must also safeguard student data and foster inclusive learning environments free from bias.

By embracing these principles, we can harness the transformative power of AI to unlock human potential. Together, we can shape a learning landscape that is both innovative and safe, while empowering our children with the skills of tomorrow.

By Dr Jamie Smith, Executive Chairman of C-Learning

Jamie is an Executive Chairman, Founder and Co-Founder of companies like www.safeguarding24.com and other ventures, as well as a Board member of organisations including military charities and education institutions.

Jamie’s work is focused on pushing the boundaries of innovation to create a smarter and more sustainable planet.
