Staying safe online: New Age Appropriate Design Code

Everyone is talking about the Age Appropriate Design Code – so how will this upcoming legislation protect our children online?

After lengthy campaigning, the Information Commissioner's Office (ICO) has released its much-anticipated Age Appropriate Design Code.

You may have heard about this groundbreaking initiative, which was under consultation until 31st May 2019.

But what exactly is it, and how does it affect you?

Aimed at providers of social media platforms, content streaming services, online games, apps, devices, search engines and other websites, it outlines a code of practice for online services ‘likely to be accessed by children in the UK’.

Through a set of 16 robust standards, it ensures that the best interests of the child are a primary consideration during the design and development phase. In doing so, it paves the way for parents and children to make informed choices and exercise control.

This is vital, since many online services frequented by children were initially designed with adults in mind, well before the advent of new technologies and of significant legislation around data protection and consumer rights, which allowed these services to bypass basic online safeguarding principles.

It is also worth noting that the code is not restricted to services specifically directed at children; it also covers services that are not aimed at children but are nonetheless likely to be used by under-18s. So even if a service claims to target adults, documented evidence is required to demonstrate that children are not likely to access it in practice.

Why am I so excited?

As educators we have come a long way in raising awareness of risk and empowering pupils, parents and carers with strategies to stay safe online as part of our duty of care.

Yet we all know that children are only as safe as the environment they occupy – their online world is a little like William Golding’s ‘Lord of the Flies’, the only difference being that here they inhabit a virtual island, unregulated and lacking in adult supervision, where they are unwittingly manipulated into forfeiting their personal information by entities masked behind the anonymity of their screens.

A recent report by the Norwegian Consumer Council, Deceived by Design, warns that many big tech companies currently employ psychologists to design ‘sticky’ environments that nudge children to spend more time online and thereby generate greater profits.

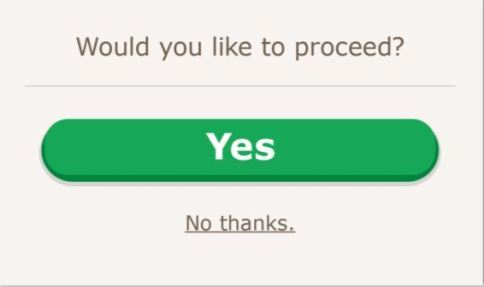

Common tactics include 'dark' patterns: tricks built into web and app design to encourage children to make choices they may not have intended, such as buying or signing up for something.

So last year, when a group of pupil cybermentors at Greenford High School were invited to take part in a focus group run by Revealing Reality, which had been commissioned by the ICO to inform the Code's development, I was thrilled.

Their concerns were evident from the start, ranging from a lack of clarity around default privacy settings on social media, and the adult terminology used in terms and conditions notices, to confusion about how their data was being used and indirect ways their location and personal information could be tracked.

Many also pointed out the varied strategies employed to extend their time online, such as positive feedback loops, reward cycles and forfeiting points if they paused in the middle of a game.

Some pupils also admitted setting these concerns aside, believing they had to agree to certain terms or else miss out on these services altogether, and with them the chance to socialise and benefit from the apps their peers used.

It is therefore great news that their voices, along with several others nationally, have not only been heard but taken on board in shaping this vital piece of legislation.

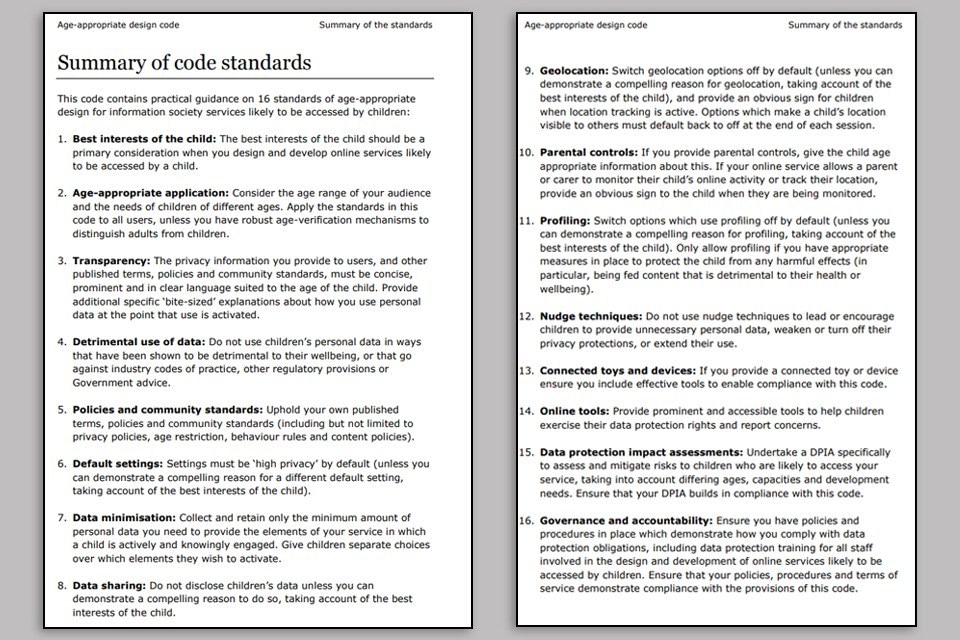

A summary of the 16 standards from the code

So what does the Code mean?

The entire document is available from the ICO, but I've taken the liberty of summarising below the key recommendations and significant nuggets that you will find useful for your discussions with pupils and parents.

These include, but are not limited to, ensuring providers take responsibility for:

- Establishing a high privacy default setting on all sites

- Introducing robust age verification checks on platforms or treating all users as children

- Limiting how children’s personal data is collected, used and shared

- Disabling geolocation tools by default and providing an obvious sign for children when tracking is active, which must default back to off when each session is over

- Removing targeted advertising as standard, unless there is a compelling reason not to

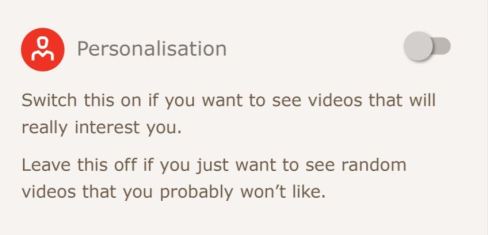

- Discouraging 'nudge' techniques that push children to engage in a certain way and keep them online for longer. Examples include Snapchat 'streaks' and Facebook 'likes', luring children into clicking preferred options through more prominent buttons, colours or fonts, and making it 'difficult' for them to manage their choices. Instead of driving users to share personal data, the guidelines require providers to 'nudge' children towards healthy behaviours such as taking screen breaks

- Ensuring privacy information and terms and conditions are concise, transparent and in age-appropriate language, with bite-sized explanations of how data is used at the point of access

- Requiring companies to show that all staff involved in the design and development of services likely to be used by children comply with the code of practice.

And that's not all: the code will be statutory, with companies facing legal action for failing to comply, and fines of up to £18 million or 4% of annual turnover for serious breaches of data protection, in line with the General Data Protection Regulation (GDPR).

It's worth noting that critics have argued that, by treating everyone like children, the code would undermine user privacy by requiring providers to collect credit card or passport details from every user, and that it is an attack on the business model of online news and many other free services, making it difficult to target advertising to viewers' interests.

Whilst some areas still need clarification, I remain optimistic, and I hope this has inspired you to discuss this groundbreaking code with your pupils. After all, it will affect them, and their insights are critical.

The safeguarding of our children online can only be effectively addressed through collective social mediation and critical thinking, focusing on the impact of online behaviour and environments.

This requires a commitment by industry to take responsibility for safer online environments through improved design and monitoring, together with an inclusive and collaborative approach to education, with the child’s best interest at the heart of this. Furthermore, as technology rapidly evolves, it is vital for policy makers to keep abreast of these developments.

Only then can we take an informed and consistent approach in addressing online risk, enabling young people to safely maximise the wealth of opportunities afforded by technology.

Mubina Asaria, Online Safeguarding Consultant, LGfL
